Product Manager’s Guide to Solving Real Problems: A Case Study in Action

This case study shows work I originally put together as part of my interview at Tripleseat. The ideas presented here are based entirely on publicly available information and my own research on event planning professionals, and they reflect my own product thinking.

I’m sharing this to illustrate my approach to identifying user pain points and exploring AI-powered workflow improvements, and to showcase my product craft. None of the information shared here reflects proprietary or internal knowledge from Tripleseat.

Prompt:

The prompt I explored centered on one big challenge: how might we reduce the burden of email for event planners and improve data accuracy in event management platforms?

Case Study

I love the concept in Marty Cagan’s Inspired that a company needs “teams of missionaries, not teams of mercenaries.” In other words, product managers should develop a vision for their product and sell its outcomes and benefits, rather than acting as a group of people who build whatever they’re told.

Our goal is to drive value with thoughtful, outcome-driven solutions. You probably already know that’s what you need to do. But how? Cagan’s book offers plenty of techniques for discovery, stakeholder alignment, prototype testing, and taking a product to market with continual refinement. Where it’s somewhat lacking is the art of argumentation, especially when it comes to turning your discovery research into a product pitch.

In this guide, I’ll walk you through how I approached a real-world problem. I’m going to use my experience pitching a product to Tripleseat as a case study. Along the way, I’ll share how I used AI to sharpen my scope and tighten feedback loops, all based on publicly available information.

I hope this guide helps you connect the dots between your research and the solution you narrow in on: the solution that eventually becomes the product you launch.

1. Identifying the Problem: Research is Key

Every great product starts with a well-defined problem.

At Tripleseat, the problem was clear: event planners were spending too much time managing email, which led to costly errors.

But let me spell this out: email is not the problem.

Defining the problem should always begin with empathy. I started by asking: Why do event planners rely on email? Why is it their most-used communication method when it is so error-prone? Why not use, for example, a big-ass intake form, a client portal, or some other tool?

What did my research uncover? Planners use email because it works for their clients. Planners have to manage a lot of stakeholders, and decisions often get made on a rolling basis. Email is mobile, transactional, inexpensive, and easy enough to use.

The problem is not email. It’s getting event details out of email and into event planning software (aka Tripleseat), a manual step that invites human error and expensive mistakes, especially when event details are missed.

How to: Always validate your assumptions with real data and research. This helps ensure that you're tackling the right problem before jumping to solutions.

To narrow in on the problem, I conducted research using publicly available information like industry reports, user feedback, and competitor analysis. This research allowed me to truly understand the pain points and avoid assumptions.

Key Takeaway:

Begin defining the problem with empathy, then do your research.

When building slides, your “Problems” slide can be more qualitative, and the impact can stay high-level.

2. Framing the Opportunity: Quantify that thang

If the problem is the cluster###k of getting event details out of email, then the next step is to quantify the impact of that problem on the planner’s business. Remember, as product managers, we aren’t just building features; we are trying to drive business outcomes. That calculation is your way of measuring the impact on the business.

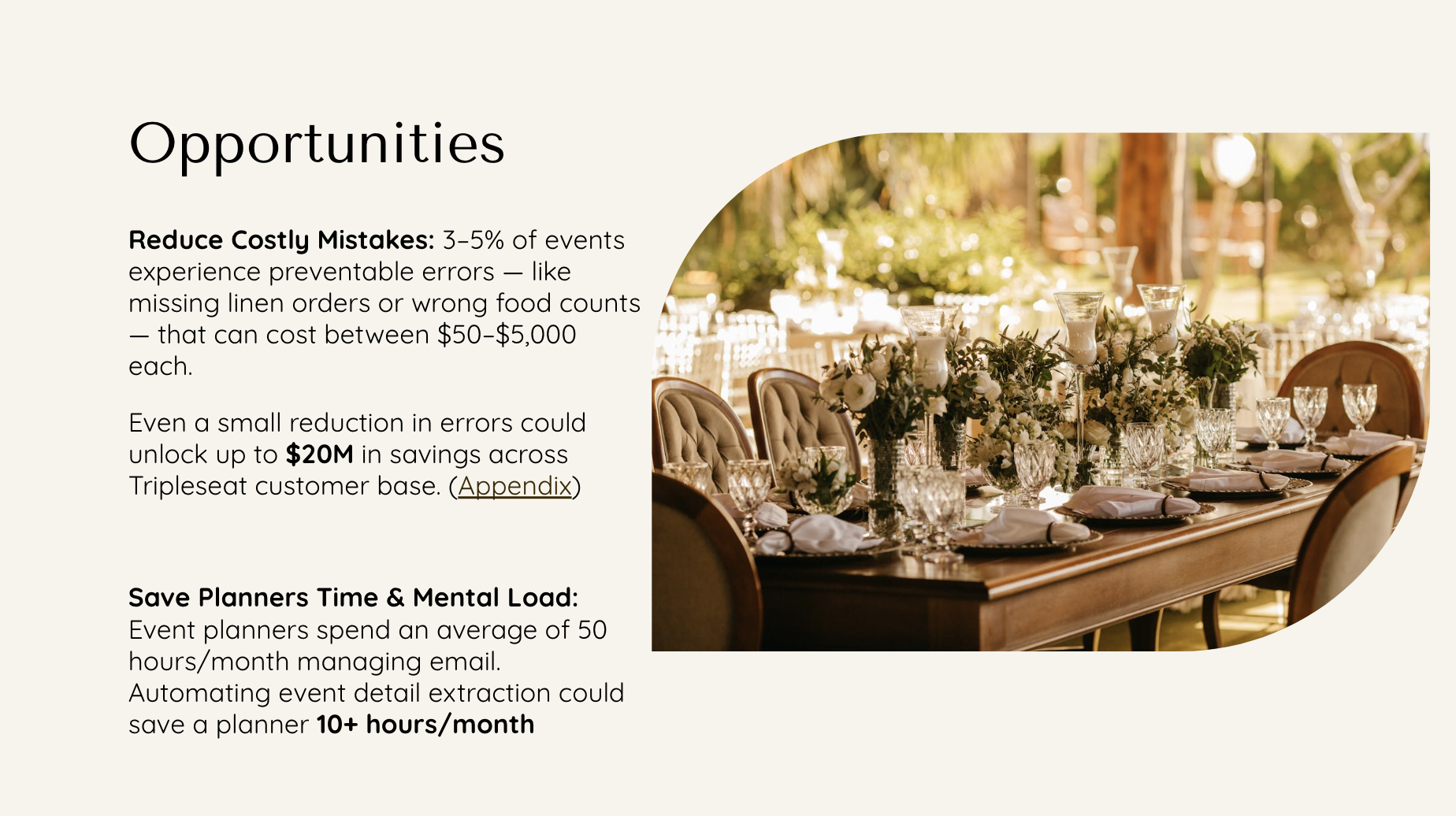

How to: From industry benchmarks I found online, I learned that planners budget for 3-5% of events to experience some sort of preventable error, costing between $50 and $5,000 per mistake. I estimated that reducing these errors could save millions of dollars across Tripleseat’s customer base. This gave the product a clear business case and a strong argument for moving forward.
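To make the cost-of-errors estimate concrete, here is a minimal Python sketch of the arithmetic, assuming a simple model: annual cost = events per year × share of events with an error × cost per error. The 3-5% error rate and the $50-$5,000 cost range come from the benchmarks above; the 200-events-per-year venue and the function name `expected_error_cost` are hypothetical, for illustration only.

```python
def expected_error_cost(events_per_year: int, error_rate: float, cost_per_error: float) -> float:
    """Expected yearly cost of preventable errors for one venue.

    Simple hypothetical model: events x share of events with an error x cost per error.
    """
    return events_per_year * error_rate * cost_per_error


# Hypothetical venue size (an assumption, not a figure from the research).
EVENTS_PER_YEAR = 200

# Benchmark ranges from the text: 3-5% of events, $50-$5,000 per mistake.
best_case = expected_error_cost(EVENTS_PER_YEAR, 0.03, 50)
worst_case = expected_error_cost(EVENTS_PER_YEAR, 0.05, 5_000)

print(f"Estimated preventable-error cost: ${best_case:,.0f} to ${worst_case:,.0f} per year")
```

A per-venue range like this would then be multiplied by the number of customer venues to get a customer-base figure. The width of the range is a good reminder to present the result as a range rather than a single point estimate.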

Again, center customer empathy. Email is not the enemy; the sheer volume of details and the expensive mistakes are the problem. I read online that event planning is reported to be one of the most stressful jobs, somewhere close behind firefighting. When you look at planners’ workflows, it’s like, uhhh yeah!!!

So the second opportunity I tried to quantify was saving planners’ time and mental load: saving just a few minutes per email could add up to 10+ hours a month, time that can be reinvested in the business.
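The time-savings claim is also simple arithmetic. Here is a minimal sketch with hypothetical inputs that are not from the original research: 3 minutes saved per email, 10 event emails a day, and 21 workdays a month. It shows how a few minutes per email can add up to 10+ hours a month.

```python
def hours_saved_per_month(
    minutes_saved_per_email: float,
    emails_per_day: int,
    workdays_per_month: int = 21,
) -> float:
    """Convert per-email time savings into hours saved per month."""
    total_minutes = minutes_saved_per_email * emails_per_day * workdays_per_month
    return total_minutes / 60


# Hypothetical inputs for a single planner (assumptions for illustration).
saved = hours_saved_per_month(minutes_saved_per_email=3, emails_per_day=10)
print(f"{saved:.1f} hours saved per month")  # 3 * 10 * 21 = 630 minutes
```

Keeping each input as a named parameter makes it easy to show stakeholders how sensitive the estimate is to email volume.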

Key Takeaways:

A solid business case is essential. Quantifying the value of a product helps align stakeholders and demonstrate the impact of your solution.

What makes a good business case? It depends on your industry and your users, but because revenue and operational costs are typically the biggest levers in B2B SaaS, I’d say you’re usually in the right place if you’re looking at a number that starts with a $ or ends with a time unit (minutes, hours, days, months, etc.).

This isn’t easy work (wait till you see my appendix). Digging into this calculation is probably where I spent the most time preparing my product pitch.

3. Defining User Stories: Thinking B2B2C

Product managers often focus on either the business (B2B) or the consumer (B2C), but in B2B2C models, you need to think about both sides when you’re coming up with a solution. I highly recommend making your user stories reflect these needs and dynamics — especially if the two might be at odds with each other.

In Tripleseat’s case, I defined user stories from both the event planner’s perspective and their client’s perspective. This helped me narrow in on a solution that would benefit both the customer (the planner) and the end user (the planner’s client).

Key Takeaway:

Always remember to balance the needs of both the business and the end consumer. This ensures that your solution delivers value at all levels.

4. Wireframing: A Simple Flow

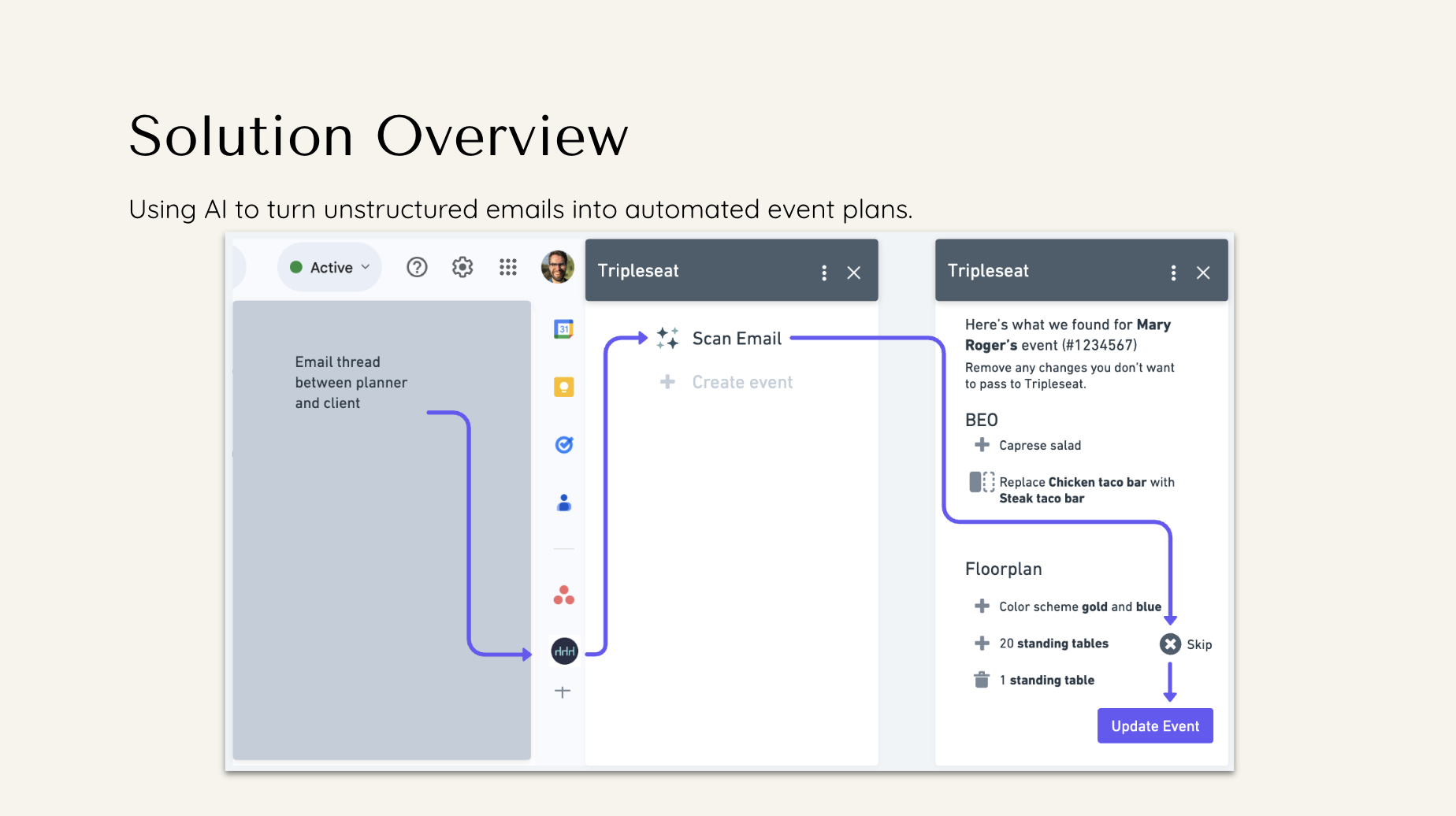

Once the problem, opportunity, and user stories were defined, I created a simple wireframe in Whimsical to outline the solution.

The goal was to keep the wireframe as simple as possible — show the functionality in three steps — so I had enough to describe the happy path without creating distractions. I was able to communicate that this was just the beginning, and there were plenty of opportunities for how we could build on this initial idea.

Key Takeaway: Start simple, but leave room for future growth. This makes it easier to iterate and scale as you gather more data.

5. Defining Success Metrics & Diagnostic Metrics

Measuring success is crucial for any product. I defined both success and diagnostic metrics for the Tripleseat product so that I could continue optimizing the product post-launch.

This is worth dwelling on for a moment, because choosing success metrics can be tricky, especially when the problems I was trying to solve (miscommunication, mistakes, mental load) don’t leave clear digital footprints.

So here’s how I thought about it: if I see users continue to engage and expand their usage over time, I have good evidence we’re meeting customer needs. Then I can tie these success metrics back to the problems using a mix of quantitative data and qualitative research, conducted both before building and after launch.

Success metrics:

Demand - Before building anything, validate that there is demand. Consider a fake door test on the event details page in Tripleseat: a button like “Scan emails for event details” or “AI Assistant - capture event details from your Gmail.”

Adoption - Once the feature is launched, is adoption meeting the demand? Weekly active users can help show how the feature is being used.

Error margin - For users who adopt the feature, what is their error margin before and after using the scanner? Are fewer mistakes made per year? This would require some qualitative and quantitative research with a pilot group, as well as some ongoing case studies.
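The success metrics above reduce to a few ratios. Here is a minimal sketch of how demand, adoption, and the before/after error margin might be computed. All pilot-group counts and helper names are hypothetical, and none of the numbers come from the research. Comparing weekly active users to fake-door clicks is only a rough proxy for “adoption meeting demand.”

```python
def rate(numerator: int, denominator: int) -> float:
    """Ratio helper; returns 0.0 when there is nothing to divide by."""
    return numerator / denominator if denominator else 0.0


def relative_reduction(before: float, after: float) -> float:
    """Share of the 'before' error rate eliminated after adopting the feature."""
    return (before - after) / before if before else 0.0


# Hypothetical pilot-group numbers, for illustration only.
fake_door_viewers, fake_door_clicks = 1_000, 120   # Demand: fake door test
weekly_active_users = 90                           # Adoption: WAU after launch
events_before, errors_before = 100, 4              # Pilot period before the scanner
events_after, errors_after = 100, 2                # Pilot period with the scanner

demand = rate(fake_door_clicks, fake_door_viewers)
adoption_vs_demand = rate(weekly_active_users, fake_door_clicks)
error_before = rate(errors_before, events_before)
error_after = rate(errors_after, events_after)

print(f"Demand (click-through): {demand:.0%}")
print(f"Adoption vs. demand: {adoption_vs_demand:.0%}")
print(f"Error margin: {error_before:.0%} -> {error_after:.0%} "
      f"({relative_reduction(error_before, error_after):.0%} fewer errors)")
```

With real pilot data, the error counts would come from the qualitative and quantitative research described above rather than from product logs alone.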

Diagnostic metrics:

Retention - Understand users’ trust in the feature and how long they keep using it.

Accuracy - Post-launch, I want to understand the accuracy of the tool in real-world usage. One way to measure this: how frequently do users need to correct mistakes made by the email scanner?
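The accuracy diagnostic can be tracked as a correction rate. Here is a minimal sketch, assuming the product logs how many event fields the scanner fills in and how many of those a user later edits. The `ScanLog` structure and the counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScanLog:
    """Hypothetical weekly log of the email scanner's output."""
    fields_extracted: int   # event fields the scanner filled in
    fields_corrected: int   # of those, fields a user later changed


def correction_rate(log: ScanLog) -> float:
    """Share of extracted fields that users had to fix (0.0 if nothing was extracted)."""
    if log.fields_extracted == 0:
        return 0.0
    return log.fields_corrected / log.fields_extracted


def accuracy(log: ScanLog) -> float:
    """Observed accuracy: share of extracted fields that users left unchanged."""
    return 1.0 - correction_rate(log)


week = ScanLog(fields_extracted=500, fields_corrected=40)  # made-up numbers
print(f"Correction rate: {correction_rate(week):.1%}, accuracy: {accuracy(week):.1%}")
```

Treat this as a proxy, not ground truth. A user edit isn’t always a scanner mistake (a client may simply change the headcount), and mistakes nobody notices never show up as corrections.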

Together, these metrics show whether we are heading in the right direction throughout the product development lifecycle. They would help me take the product from 0 to 1 to 2.

Key Takeaway:

Metrics are your roadmap. They help you track progress, identify areas for improvement, and ensure your product delivers real value.

For problems that don’t have clear digital footprints, you can use mixed methods to make sure customer engagement is aligned with the problems you’re solving.

A Robust Appendix: What I left out

To keep the pitch within the allotted time (7-10 minutes), there was, of course, a lot I had to leave out.

So I included an appendix that detailed my research findings, timelines, and t-shirt sizes. This was a way to back up my decisions and show that the solution wasn’t just theoretical: it was based on data, user insights, and a clear business case.

Future directions

Experimentation & Hypothesis Validation

Additional Open Questions

What’s the baseline cost of a mistake?

Analysis of Error Margin

Delivery Timeline

Overview of Google Workspace Integration

NLP Training

Key Takeaway:

Always include supporting evidence for your decisions. This not only builds credibility but also helps stakeholders understand how you arrived at your solution.

In the end, this pitch didn’t have a Q&A; after the pitch, we switched into a traditional interview format. But I have had interviews that did.

Some closing thoughts

Product planning with AI: Sharper scope and tighter feedback loops

While this wasn’t my first stab at using AI in product planning, this case study gave me a chance to really stretch myself and put together a stronger pitch, faster than I could have without it. Looking back on this exercise, AI was amazing for my productivity.

As a PM, I’m pretty collaborative. This felt like having a conversation with a colleague.

ChatGPT wasn’t able to tell me the answer. It shot out a couple of ideas, but honestly, they were kind of crappy. ChatGPT couldn’t grasp the problem, the opportunity, or the user dynamics until I fed it a lot of research. But once I had my aha moment, I was able to use AI to advance my goals.

By clearly defining users, problems, and assumptions, I think I was able to form strong user stories from the beginning: stories that captured the dynamic between Planner and Client.

So how exactly did AI play a role in my product planning?

Sharpen scope + pitch: AI helped me structure a compelling product pitch and articulate the user problem, opportunity, solution scope, and future direction, all within a tight 8-minute format.

Math + Modeling: ChatGPT helped me quantify certain business impacts, especially when trying to model the costs of mistakes an event planner might make. It also helped me formulate adoption benchmarks and size a beta test to support a strong ROI narrative.

Risk assessment: One of the biggest challenges in product development is managing risk. There are usually known knowns, known unknowns, and unknown unknowns. ChatGPT helped me assess technical feasibility and risks, which let me compare implementation paths (Gmail add-on vs. in-app inbox) and understand Google Workspace limitations.

Timeline estimates: AI helped outline realistic timeline estimates, using t-shirt sizing for NLP training, integration scope, and developer ramp-up, which gave me confidence in my MVP roadmap. What really knocked my socks off was that it even helped me estimate timelines based on developers’ different levels of familiarity and skill sets.

Key Takeaway:

AI can be a powerful tool for narrowing down scope, tightening your argument, and speeding up feedback loops.

Don’t just use it for the end solution — use it to refine the process itself.

What would I have done differently?

I really expected a robust Q&A following my pitch, but there wasn’t one. So while I was prepared to talk in depth about the different workstreams, milestones, etc., I wish I had included something about the timeline and rollout management in the pitch itself.

Disclaimer (again)

This research was done using publicly available information, and while it offers insights into how I think through product development, it should be understood that all data and research are derived from external, non-proprietary sources.